Department of Defense

Mission Engineering Guide

Version 2.0

October 1, 2023

Office of the Under Secretary of Defense

for Research and Engineering

Mission Capabilities

Washington, D.C.

Distribution Statement A. Approved for public release. Distribution is unlimited.

DOPSR Case No. 23-S-3518.


Office of the Under Secretary of Defense for Research and Engineering

3030 Defense Pentagon

Washington, DC 20301

https://ac.cto.mil/mission-engineering/


CONTENTS

1.0 Introduction

1.1 Background

1.2 Purpose of the Mission Engineering Guide

2.0 Mission Engineering

2.1 Overview

2.2 Mission Engineering Methodology

2.3 Considerations

2.3.1 Digital Engineering in Mission Engineering

2.3.2 Robustness and Transparency Across the Mission Engineering Process

2.3.3 Reuse––Curation of Data and Products

3.0 Mission Problem or Opportunity

3.1 Identify Mission and Mission Engineering Purpose

3.2 Determine Investigative Questions

3.3 Identify and Engage with Stakeholders

4.0 Mission Characterization

4.1 Develop Mission Context

4.2 Define Mission Measures and Metrics

5.0 Mission Architectures

5.1 Mission Threads

5.2 Mission Engineering Threads

5.3 Develop Baseline and Alternative Mission Threads and Mission Engineering Threads

6.0 Mission Engineering Analysis

6.1 Complete Design of Analysis

6.1.1 Develop and Organize Evaluation Framework (Run Matrix)

6.1.2 Identify Computational Methodology and Simulation Tools

6.1.3 Organize and Review Datasets

6.2 Execute Models, Simulations, and Analysis

6.2.1 Execute and Analyze the Baseline

6.2.2 Adjust the Run Matrix (as Needed)

6.2.3 Execute and Analyze the Alternative Mission Approaches

6.2.4 Validate the Fidelity of Analytic Results

6.3 Adjust Mission Threads and Mission Engineering Threads

7.0 Results and Recommendations

8.0 Summary

9.0 Appendix

9.1 Mission Engineering Glossary

9.2 Abbreviation List

9.3 References


1.0 INTRODUCTION

1.1 Background

As the Department of Defense works to provide the Joint Force with the necessary capabilities,

technologies, and systems to successfully execute missions, practitioners of mission engineering

can leverage the process described in this guide to help identify and analyze gaps as well as

determine which capabilities, technologies, and systems can improve mission outcomes.

Increasingly, the Department is emphasizing a mission-focused approach to operations and support

activities to ensure resources are aligned to accomplish organizational goals. Mission engineering

is a process that helps the Department better understand and assess impacts to mission outcomes

based on changes to systems, threats, operational concepts, environments, and mission

architectures. Mission-based, data-driven outputs help to inform acquisition, research and

development, and concepts of operation, as well as to “assess the integration and interoperability

of the systems of systems (SoS) required to execute critical mission requirements.”[2] Using

software tools to digitally engineer a mission, the Department can deliver quantitative results that

will improve the quality and robustness of information for decision making.[3]

The Office of the Secretary of Defense and the military Components use mission engineering to

identify military needs and solutions, explore trades across the mission, mature operational

concepts, guide requirements and resource planning, inform experimentation, and prototype

selection or program decisions.[4] As the technical subelement that enables Mission Integration

Management (MIM), the mission engineering process also provides inputs to inform portfolio

management decisions.[5]

1.2 Purpose of the Mission Engineering Guide

The Department of Defense Mission Engineering Guide (MEG) serves as a key document that

provides practitioners and subject enthusiasts a strong overview and understanding of mission

engineering. This guide includes a detailed explanation of the interdisciplinary mission

engineering process, its key elements, and its associated terminology. The MEG is not a step-by-step

handbook on how to implement mission engineering; rather, the MEG outlines a scalable and

adaptable methodology that can be tailored to address a variety of questions based on scope,

complexity, and time. Specifically, the MEG:

- Describes the mission engineering methodology and its main attributes

- Provides guiding principles for executing mission engineering and developing rigorous analytical products

- Advises on best practices and considerations when conducting mission engineering

- Informs mission engineering practitioners at different levels of proficiency and from diverse disciplinary backgrounds about the processes used to conduct mission engineering activities

- Defines mission engineering terminology

[2] Department of Defense Directive 5000.01, Section 1b, “The Defense Acquisition System,” September 9, 2020

[3] Author’s note: example tools include physics-based and effects-based simulations as well as model-based or enterprise architectures, and other digital modeling software.

[4] Department of Defense Instruction 5000.88, Section 3.3, “Mission Engineering and Concept Development,” November 18, 2020

[5] Public Law 114-328, Section 855, “National Defense Authorization Act for Fiscal Year 2017,” December 23, 2016


2.0 MISSION ENGINEERING

2.1 Overview

The mission engineering process decomposes missions

into constituent parts to explore and assess relationships

and impacts in executing the end-to-end mission.

Mission engineering is used to identify and quantify

gaps, issues, or opportunities across missions and seeks

to address these by assessing the efficacy of potential

capability solutions––materiel or non-materiel––that

enhance mission outcomes.[6]

Mission engineering results inform decisions on military

requirements, acquisition, research, and development as

well as enable an early shift from qualitative to quantitative analysis. The methodology evaluates

end-to-end mission approaches that include measurable elements amid warfighter-defined,

threat-informed operational contexts. Mission engineering can assess a range of potential solutions––

materiel and non-materiel––within a mission context to inform systems or SoS design and

integration considerations, operational concepts, and trade-offs in Doctrine, Organization,

Training, Materiel, Leadership and Education, Personnel, Facilities, and Policy (DOTMLPF-P),

based on impacts to the mission.

Mission engineering has direct application to systems engineering processes by providing a better

understanding of characteristics, performance parameters, functions, and features of systems and

SoS that have an impact on mission outcomes. The goal of mission engineering is to engineer

missions by identifying the right things (i.e., technologies, systems, SoS, or processes) to achieve

the intended mission outcomes and provide mission-based inputs into the systems engineering

process to aid the Department in building things right.

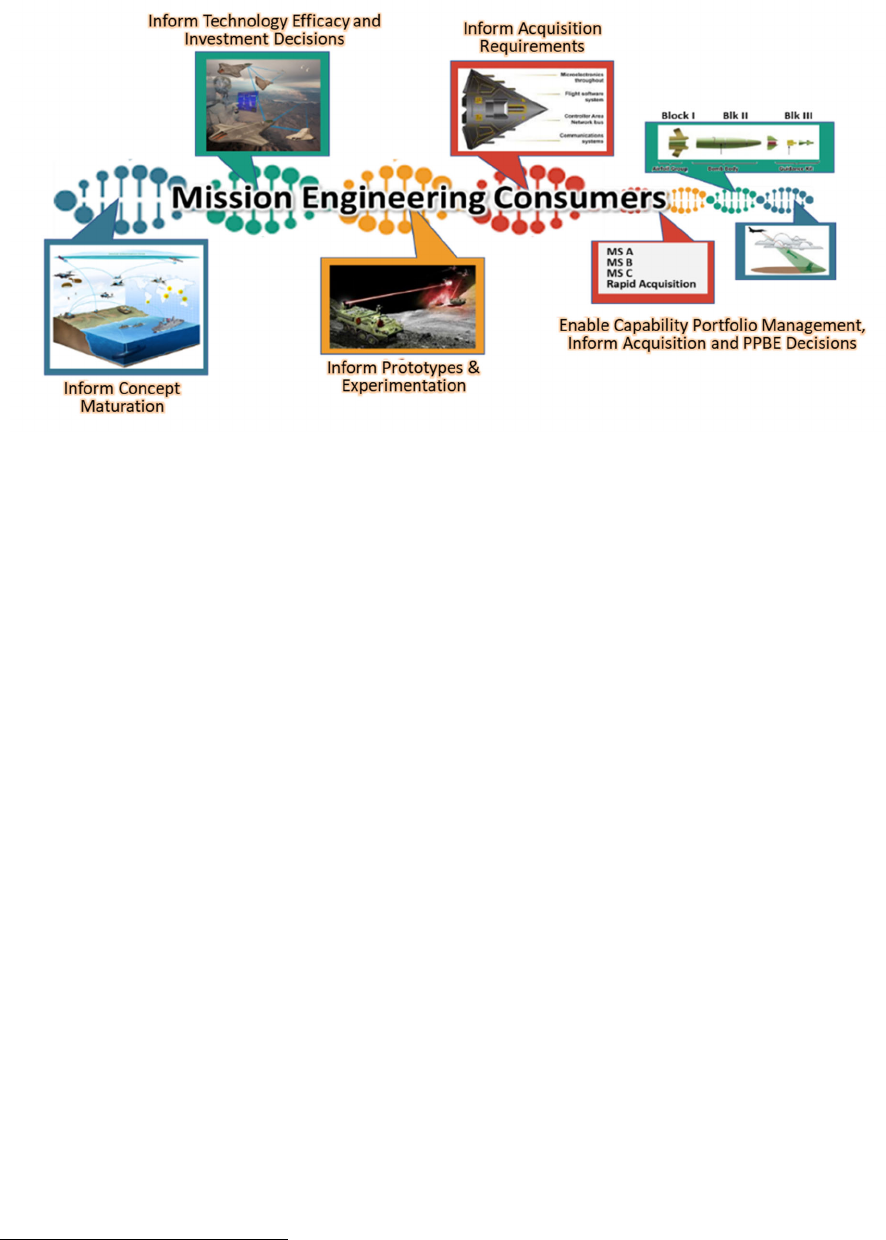

The results of mission engineering are used for a variety of purposes. For instance, findings can

inform technology investments, suggest alternative ways to use current systems, identify mission

gaps and preferred approaches to addressing these gaps, and trigger the initiation of a new

acquisition to meet capability gaps. Mission engineering results may satisfy the requirements for

a Capabilities Based Analysis[7] or provide the starting point for an Analysis of Alternatives.[8]

Figure 2-1 illustrates the various consumers of mission engineering products ranging from concepts to

capability development to acquisition.

[6] Author’s note: in accordance with CJCSI 5123.01I, “Charter of the Joint Requirements Oversight Council and Implementation of the Joint Capabilities Integration and Development System,” October 30, 2021

[7] Office of the Chairman of the Joint Chiefs of Staff, “Manual for the Operation of the Joint Capabilities Integration and Development System,” current edition

[8] DoDI 5000.02, “Operation of the Defense Acquisition System,” January 7, 2015

Mission engineering is an interdisciplinary process encompassing the entire technical effort to analyze, design, and integrate current and emerging operational needs and capabilities to achieve desired mission outcomes.


Figure 2-1. Consumers of Mission Engineering Outputs

2.2 Mission Engineering Methodology

Missions are tasks and actions undertaken to achieve a specific objective.[9] The mission engineering

process can be scoped based on the complexity of the mission, the functional mission level (i.e.,

strategic, operational, or tactical), the availability of data, and the decisional needs of the mission

engineering activity. The methodology can be adapted according to practitioners’ goals: to

preemptively identify risks and opportunities for change; to resolve identified issues across a

mission; or to explore potential “what if?” changes to the mission and its operational environment.

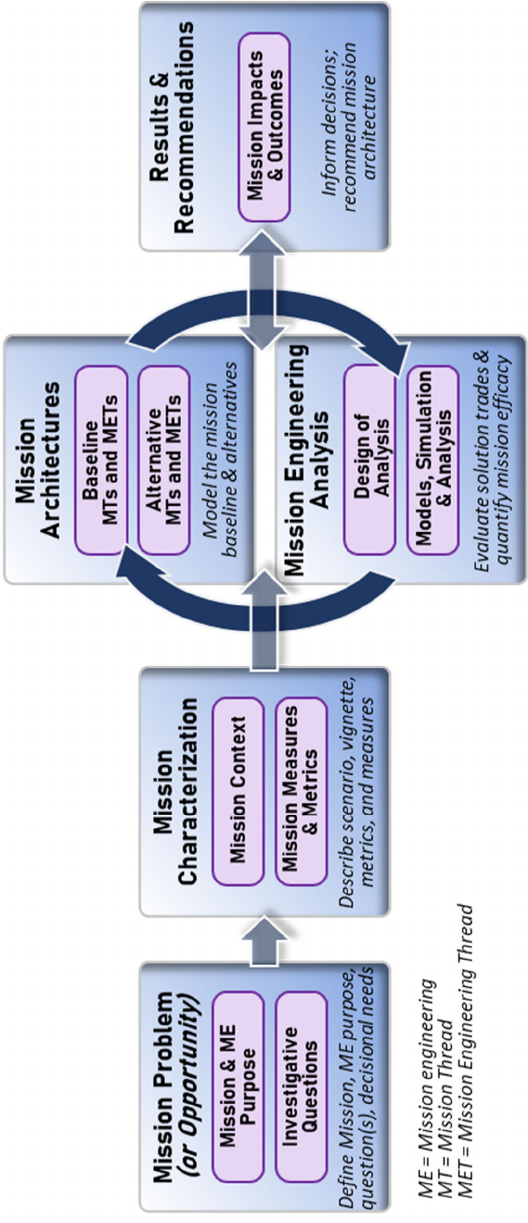

The process illustrated in Figure 2-2 is not necessarily a sequence of discrete steps, but it can be

performed iteratively as more information is gained throughout the process. This methodology

should produce repeatable and traceable results and products that can provide justifiable evidence

to advise decision makers, and it should be leveraged to inform subsequent analyses.

[9] Department of Defense Dictionary of Military and Associated Terms (formerly Joint Publication 1-02)


Figure 2-2. The elements of the mission engineering process.


2.3 Considerations

2.3.1 Digital Engineering in Mission Engineering

Digital engineering principles support a continuum that enables consistency and reuse of models

and data when applied in the mission engineering process.[10] The use of digital representations and

artifacts provides both a technical means to communicate across a diverse set of stakeholders and

the means to deliver data-driven, quantitative outputs resulting in better-informed decisions. With

the use of digital tools and model-based engineering approaches, mission architectures can be

represented as digital mission models within a particular scenario. The use of these tools and

approaches allows for traceability from mission tasks to solutions. These tools also enable the

reuse of products to facilitate updates and changes as needed. Digital linkage and traceability

enable strong configuration management of mission engineering inputs and products. In addition,

the use of general-purpose modeling languages, overlays, styles, and frameworks––e.g., Systems

Modeling Language (SysML), Business Process Model and Notation (BPMN), Unified

Architecture Framework Modeling Language (UAFML), and Unified Modeling Language

(UML)––enables common understanding, sharing, and reuse of products across the enterprise.

There are a variety of digital tools that can be used to implement mission engineering depending

on the scope, products, and fidelity required. Examples of digital tools include physics-based,

behavior-based, and effects-based simulations, as well as model-based or enterprise architecture

software. These tools provide a quantitative (i.e., computational and logical) means to trace, analyze,

and evaluate a variety of factors that impact the end-to-end mission.

2.3.2 Robustness and Transparency Across the Mission Engineering

Process

The mission engineering methodology is designed to explore multiple options for addressing

mission challenges or seizing opportunities for technology integration across a mission. The

methodology provides a means to compare the effectiveness of alternative mission approaches or

sensitivities around a range of uncertainties to explore the mission space and provide options for

trading capabilities or balancing investments.

To lend transparency to the mission engineering process, practitioners should document

assumptions, constraints, sources of data used, and other factors that drive the results of analysis.

Increased visibility of data, methods, inputs, and factors influencing the design of analysis enables

stakeholders and decision makers to obtain a better understanding of, and confidence in, the

outputs and findings of the activity.

Together, the robustness and transparency of the mission engineering methodology enable

practitioners to obtain a better understanding of each other’s work and increase credibility of the

results.

[10] Department of Defense Chief Technology Officer website, “Digital Engineering,” https://ac.cto.mil/digital_engineering/


2.3.3 Reuse––Curation of Data and Products

Without relevant and trustworthy sources of data, completing the mission engineering process may

not even be possible. Based on the complexity and scope of the mission, large datasets may be

needed to characterize the mission and develop the required models for mission engineering—the

development of mission architectures and the execution of mission engineering analysis. The

datasets that support mission engineering analysis include details on the mission and concepts of

operation, operational environment and geographic region, red- and blue-force structures and

orders of battle, and systems or SoS parameters and concepts of employment. A record of other

completed analyses (e.g., engineering-level analyses) that address similar or related topics is

valuable. Practitioners should cast as wide a net as possible across the Department of Defense,

other U.S. Government partners, academia, industry, and the national laboratories for relevant

source data. The collection and storage of data will be an iterative process throughout mission

engineering as more information will be required for different activities and alternative mission

approaches under assessment. Data sources should be credible to ensure that the products

developed are valid and that results of appropriate fidelity (i.e., accuracy, precision, and statistical

confidence) are obtained. In the event the necessary datasets are not available, reasonable

assumptions may be required.
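
As a minimal sketch of how the statistical-confidence aspect of fidelity might be reported, the example below summarizes replicated model runs with a mean and a normal-approximation confidence interval. The function name `summarize_runs`, the outcome values, and the choice of a 95 percent interval (z = 1.96) are illustrative assumptions; the guide does not prescribe a particular statistical method.

```python
import math
import statistics


def summarize_runs(outcomes, z=1.96):
    """Summarize replicated run outcomes (hypothetical helper).

    Returns the mean, the sample standard deviation, and a
    normal-approximation confidence interval around the mean.
    z=1.96 corresponds to roughly 95 percent confidence.
    """
    n = len(outcomes)
    if n < 2:
        raise ValueError("need at least two runs to estimate spread")
    mean = statistics.mean(outcomes)
    stdev = statistics.stdev(outcomes)  # sample standard deviation
    half_width = z * stdev / math.sqrt(n)
    return {
        "runs": n,
        "mean": mean,
        "stdev": stdev,
        "ci_low": mean - half_width,
        "ci_high": mean + half_width,
    }


# Invented outcome values for illustration only (e.g., fraction of
# mission objectives met in each of five replicated runs).
example_outcomes = [0.62, 0.58, 0.65, 0.60, 0.55]
summary = summarize_runs(example_outcomes)
print(summary)
```

With only a handful of runs, a t-based interval would usually be more appropriate than the normal approximation; the sketch keeps to the standard library for brevity.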

Practitioners should consider the following factors when developing datasets for mission

engineering:

- Timeliness––When were the data last updated?

- Lineage––What is the source of the data? Is the source authoritative?

- Fidelity––What is the degree of confidence in the quality of the data?

- Validity––Are the data complete? How do the data match agreed-upon definitions?

- Linkage––How were the data generated, converted, or collected? With what mission engineering activity were the data associated?

- Storage––How would one catalogue and retrieve the data? With what other datasets are they topically associated?
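
One way to make these factors concrete is to record them as metadata alongside each dataset. The sketch below is illustrative only: the class `DatasetRecord`, its field names, the one-year staleness limit, and the sample values are all assumptions rather than part of the guide.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class DatasetRecord:
    """Metadata for one dataset; every field name here is hypothetical."""
    name: str
    last_updated: date             # Timeliness
    source: str                    # Lineage
    source_is_authoritative: bool  # Lineage
    confidence_note: str           # Fidelity
    is_complete: bool              # Validity
    collection_method: str         # Linkage
    originating_activity: str      # Linkage
    catalogue_tags: List[str] = field(default_factory=list)  # Storage

    def review_flags(self, today: date, max_age_days: int = 365) -> List[str]:
        """List the factors a reviewer may want to revisit before reuse."""
        flags = []
        if (today - self.last_updated).days > max_age_days:
            flags.append("timeliness")
        if not self.source_is_authoritative:
            flags.append("lineage")
        if not self.is_complete:
            flags.append("validity")
        if not self.catalogue_tags:
            flags.append("storage")
        return flags


# Invented record for illustration only.
record = DatasetRecord(
    name="example-sensor-performance",
    last_updated=date(2021, 3, 1),
    source="hypothetical developmental test report",
    source_is_authoritative=True,
    confidence_note="single test event; limited sample size",
    is_complete=False,
    collection_method="collected during a developmental test",
    originating_activity="hypothetical base defense study",
)
flags = record.review_flags(today=date(2023, 10, 1))
print(flags)
```

Fidelity is kept as a free-text note here because the degree of confidence in a dataset is a judgment that a simple flag would not capture well.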

Mission engineering adds value to the Department’s engineering, acquisition, and operational

enterprises by facilitating the preservation and maintenance––i.e., the curation[11]––of data products

from current and prior mission engineering activities. Product curation refers not only to capturing

the results and recommendations of a particular analysis, but also to recording the assumptions,

constraints, sources, models, and data collected. Curation of these elements provides a

starting point from which subsequent mission engineering activities can be developed.

[11] Office of the Under Secretary of Defense for Research and Engineering, “Department of Defense Digital Engineering Strategy,” June 2018


When data are retained, practitioners should clearly

describe the context of the initial use of the data. Doing

so will provide future users with sufficient information

to assess whether reuse of the data is appropriate in a

new context.

Practitioners should consider compiling a library of

models and datasets that are developed and used throughout the mission engineering activity and

should document the source of data. As new information is developed and collected, the data

within the models can be updated to reflect changes in threat, concepts of operation (CONOPS),

and system performance data. The datasets can be generated and collected from wargames,

exercises, developmental and operational tests, experimentation, and demonstrations. For

example, data and results from experimentation provide valuable information on whether the

potential DOTMLPF-P solutions are implementable or have the claimed performance within a

relevant live (physical), virtual, or constructive venue. Over time, properly curated datasets will

yield an increase in the fidelity of the models and results obtained from the mission engineering

activities.

While some general rules and best practices apply, the practitioner will have to make informed decisions regarding which data assets are appropriate for reuse.


3.0 MISSION PROBLEM OR OPPORTUNITY

To be effective, the mission engineering process requires thorough planning that focuses on

formulating and agreeing to a well-defined scope for the mission engineering effort. Advance

planning ensures that the options, parameters, and constraints determined throughout the mission

engineering process stay aligned and can be traced back to the intent of the effort. In addition, a

well-structured plan ensures long-lead-time elements are initiated immediately, and that the overall

implementation and execution of the mission engineering activities are focused and successful.

Therefore, mission engineering begins with establishing a clear understanding of its intended purpose,

which, in turn, is informed by a clear understanding of the mission under investigation, its

contextual setting, and its timeframe. Practitioners should capture the purpose in a statement that

synopsizes the mission gaps, problems, or opportunities that drive the effort. This statement of

purpose will inform a set of well-articulated questions that further bound and scope the focus of

the mission engineering activity.

3.1 Identify Mission and Mission Engineering Purpose

Mission engineering starts by identifying two

foundational elements: what is the mission? and what is

to be investigated about that mission? These elements

are crucial to scoping the mission engineering activities

that follow.

The mission engineering purpose—i.e., what is to be

investigated?—can take one of four forms:

- Identify potential gaps and quantify shortfalls in the ability to achieve desired mission outcomes

- Explore mission cause-and-effect relationships, or sensitivity analysis, to gain deeper understanding of the factors affecting mission outcomes

- Evaluate trade space of potential solutions to address known gaps within the mission

- Investigate mission impact of new opportunities, which can include changes to or the integration of new technologies, capabilities, or concepts of operations

From the beginning, it is important to have a clear understanding of what goal or decision will be

informed, as this will drive subsequent choices throughout the process. Understanding the

decisional needs focuses the effort to address the so what? of the mission engineering

investigation. These decisions guide the specific questions for the activity as well as the degree of

fidelity and level of analytic rigor needed from the results, findings, and conclusions.

The purpose and questions bounding the mission engineering activity should clearly articulate assumptions, gaps, problems, or opportunities.


3.2 Determine Investigative Questions

The purpose statement is amplified by posing one or more key questions that narrow the scope of

the mission engineering effort. Subsequent activities in the mission engineering methodology trace

back to answering these questions. The questions should drive analytic outputs to address the

purpose of the activity—i.e., inform the decisions, trades, and designs. The questions should guide

the selection of alternative mission approaches to be used in the design of analysis and point the

way toward the development of key measures and metrics. As such, these questions should further

refine the fidelity (i.e., the accuracy, precision, and confidence) of the analytic outputs and data

inputs. Table 3-1 offers examples of investigative questions aligned with purpose statements.

Table 3-1. Example purpose statements and questions to scope the problem or opportunity.

Example 1: Identify capability gaps: In a year 2040 Base Defense Scenario focused on the Western Islands region, the Joint Force will be executing a base defense mission and needs to achieve mission objectives (in this example, the purpose is to uncover mission capability shortfalls).

- Based on the current expected asset availability and munitions inventory, will the Joint Force be able to achieve its mission objectives?

- If not, why? What are the limiting factors or gaps preventing the Joint Force from achieving its mission objectives?

Example 2: Explore cause and effect: In a year 2040 Base Defense Scenario focused on the Western Islands region, evaluate the Joint Force’s ability to execute a base defense mission if 25 percent of the blue mission assets are not available (i.e., the purpose is to inquire how well the Joint Force will meet mission objectives).

- How do the mission outcomes change when blue assets are reduced by 25 percent?

- What is the sensitivity to attrition of red as the number of blue assets changes (decrease or increase)?

- Will changing the number of blue assets increase total survivability of blue assets? Is there a change to the total number of weapons or munitions expended?

Example 3: Trade solutions to gaps: In a year 2040 Base Defense Scenario focused on the Western Islands region, the Joint Force lacks Positioning, Navigation, and Timing (PNT) capability to perform base defense missions in contested environments (in this example, the purpose is to evaluate potential solutions that close gaps and improve mission outcomes).

- How is base defense mission success impacted by using alternate PNT technologies?

- What is the performance of alternate PNT technologies in adverse environmental conditions?

Example 4: Investigate opportunities: In a year 2040 Base Defense Scenario focused on the Western Islands region, a new capability will be fielded to support the base defense mission (in this example, the purpose is to assess impacts on the mission when integrating the new capability).

- Is the Joint Force more effective in achieving its objectives by utilizing this capability compared to the baseline mission approach (the agreed-upon starting point for how the mission will be executed to address the mission engineering effort; driven by the mission, scenario, and epoch)?

- Is mission success achieved with reduced weapon expenditure? Is survivability of platforms increased?

- Are additional capabilities or technologies (i.e., enablers) required to employ this capability solution?


3.3 Identify and Engage with Stakeholders

As the purpose of the mission engineering effort is

developed, practitioners should identify the key

stakeholders who will support the activities.

Stakeholders can be those who are informed by and those

who will use the mission engineering results to support

their efforts or make informed decisions. Stakeholders

can include end-users, sponsors, leaders, and decision

makers.

The identification of new mission capabilities can require the development and alignment of

critical skill sets, subject matter expertise, and personnel resources. Leaders and practitioners

across the enterprise should recognize these needs and coordinate their fulfillment as early as

possible. Effective stakeholder engagement can lead to the identification of subject matter experts

who can support mission engineering efforts with data, information, and the verification and

validation of assumptions.

Stakeholders help focus the mission engineering activity on the level of confidence needed to address its purpose––informing data collection, model fidelity, and the design of analysis.


4.0 MISSION CHARACTERIZATION

The statement of purpose and investigative questions are placed in a specific mission context for

analysis. Missions are purpose-specified tasks and actions to achieve specific objectives.[12]

Example sources of missions include Joint Warfighting Concepts, CONOPS, and operational

plans. Mission context is very important; the context provides critical variables that can influence

mission outcomes and decisions. These variables that characterize the mission include objectives,

factors associated with operations, and the conditions of the environment. The mission context

should also include enough information from which to derive mission measures and metrics that

address the investigative questions in the statement of purpose. Additionally, the mission context

should help evaluate the extent to which executing the mission successfully achieves the desired

outcomes and end-state.

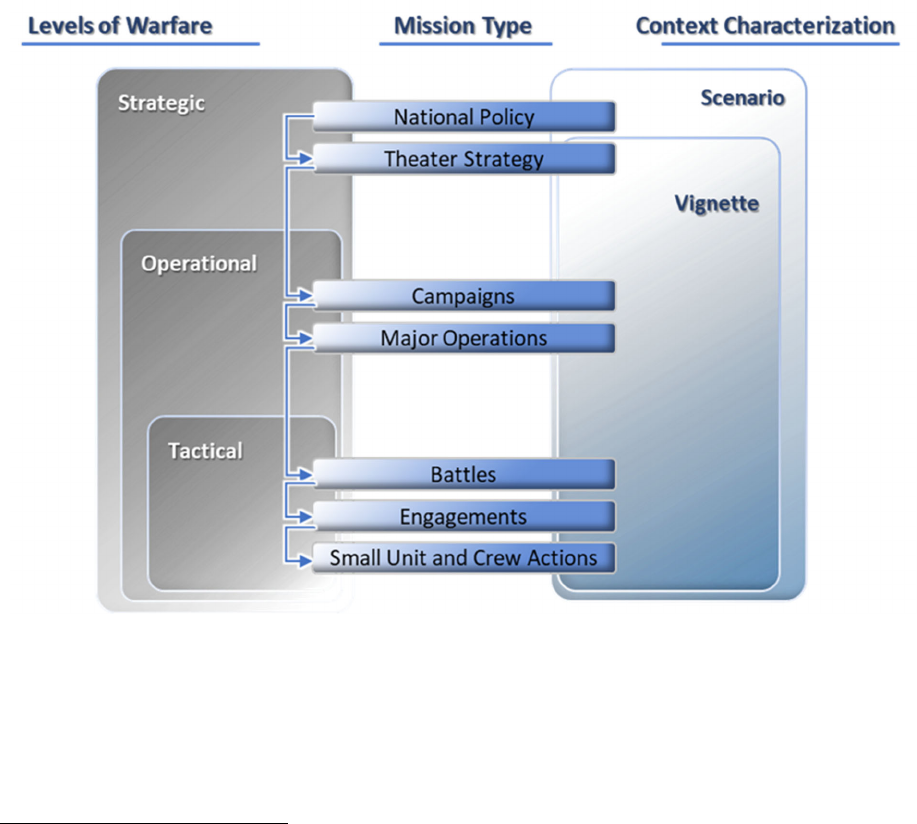

Figure 4-1. Generalized hierarchical and overlapping relationships between levels of

warfare, mission type, and context characterization.

[12] Department of Defense Dictionary of Military and Associated Terms (formerly Joint Publication 1-02)


Joint Publication 1, Doctrine for the Armed Forces of the United States, defines the three levels of warfare as

Strategic, operational, and tactical—[that] link tactical actions to achievement of

national objectives. There are no finite limits or boundaries between these levels, but

they help commanders design and synchronize operations, allocate resources, and

assign tasks to appropriate command. The strategic, operational, or tactical purpose

of employment depends on the nature of the objective, mission, or task.[13]

With a tendency to overlap, these levels are generally aligned to the context of both the objective

and mission, which are defined by scenarios and vignettes. See Figure 4-1.

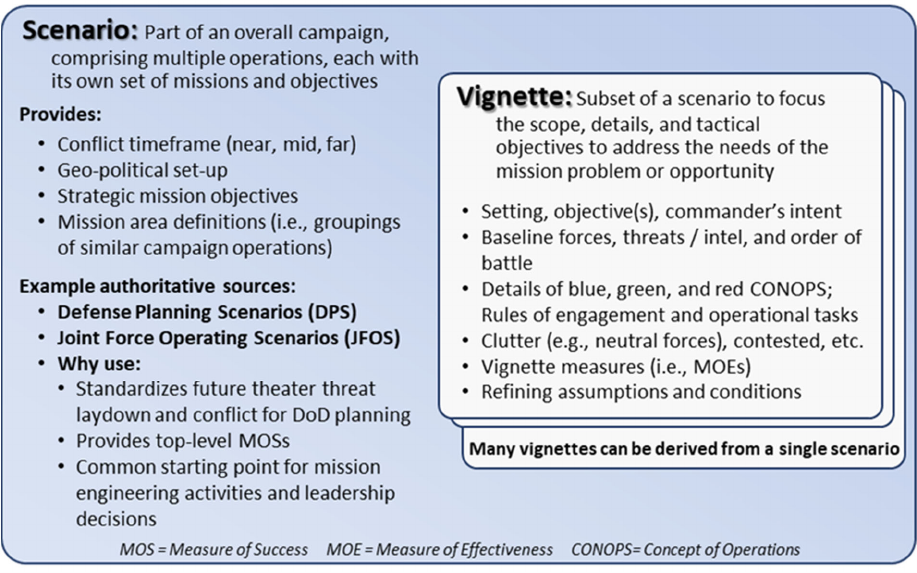

4.1 Develop Mission Context

The mission context is the background setting, conditions, timeframe, operational strategies, and

objectives of the mission that are specific to the focus of the mission engineering effort and to

answering the key questions. The collection of this information is known as the scenario, which is

derived from a campaign. The scenario captures the specific description and intent of the mission,

i.e., its objectives and CONOPS, along with its associated epoch and the relevant operational and

environmental conditions. Conditions are descriptive variables of the environment and military

operation that affect the execution of tasks in the context of the assigned mission. Conditions can

be categorized by the following:

- Physical environment (e.g., sea state, terrain, or weather)

- Operational environment (e.g., the conditions, circumstances, and influences that affect employment of capabilities and bear on the decisions of the commander)

- Functional elements and their relationships (e.g., forces assigned, threats, command and control, and timing of action)

- Informational environment

These sets of conditions can affect execution and mission outcomes. Scenarios can be decomposed

into smaller subsets of factors, which are referred to as vignettes. Vignettes are more narrowly

framed to concentrate on the most important aspects––the phase or segment––of the scenario

related to addressing the investigative questions. The Defense Planning Scenarios and the Joint

Force Operating Scenarios serve as example source documents that can be leveraged to inform the

development of scenarios and vignettes.

The following are some general considerations when characterizing the mission:

What is the purpose of the mission? The mission objectives describe the commander’s intent

and the conditions, situations, and events that constitute success. The purpose of the mission is

often hierarchical––starting with a strategic goal, segmented into operational objectives, and

then refined into the tactical effects of a given scenario or vignette.

When does the mission occur (i.e., in what epoch or timeframe)? The timeframe of the mission

is important to understand the force laydown—the capabilities, technologies, or systems to be

fielded, deployed, and available—and the operational plans and policy implications.

13

Joint Publication 1, “Doctrine for the Armed Forces of the United States,” March 25, 2013


Where is the mission happening? What geographic and geopolitical settings are relevant to the

mission? The location of the mission describes not only where the mission takes place (e.g.,

theater, area of operations), but also what geopolitical considerations are relevant to its

execution.

Who is involved (i.e., combatants and noncombatants; friendly, hostile, and neutral forces)?

The description of available forces should include blue (U.S.), green (allies), white

(noncombatants or neutral), and red (adversary) forces as well as orders of battle.

How is the mission executed? The sequence of operational events that will take place to execute

the end-to-end mission (i.e., mission approaches).

Figure 4-2 shows a framework for organizing key elements of the mission context, including the

relationships among characterizing elements, objectives, environments, assumptions, and

constraints that impact mission approaches and systems to be modeled. Practitioners should

document assumptions, constraints, and other limitations that bound the mission context.

Figure 4-2. Overview of Mission Characterization.

4.2 Define Mission Measures and Metrics

Mission measures and metrics are the means to assess the end-state or goals of a given mission

approach and evaluate the elements contributing to mission outcomes. Measures and metrics are

selected from repeatable and unambiguous objective values and threshold values, which most

directly inform the investigative questions and decisions to be addressed by the purpose statement.


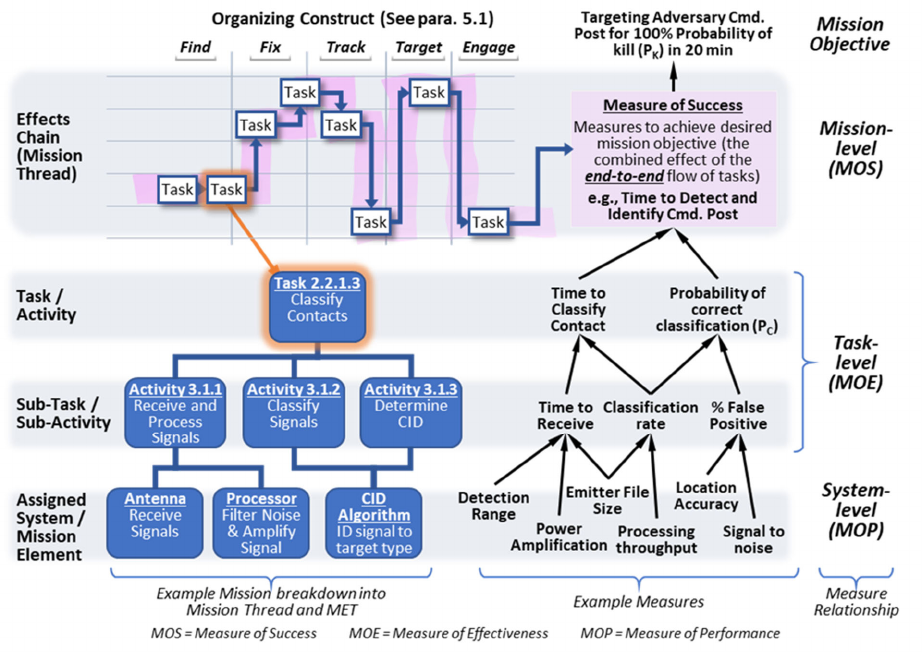

Measures of Success (MOSs)––measurable attributes or target values for success within the

overall mission in an operational environment that are typically driven by the mission objectives

of the blue force.

Measures of Effectiveness (MOEs)––measurable military effects or target values for success that

come from executing tasks and activities to achieve the MOS.

Measures of Performance (MOPs)––measurable performance characteristics or target parameters

of systems or actors used to carry out the mission tasks or military effect.

For the purposes of mission

engineering, there is a hierarchy of

measures and metrics which provides a

logical decomposition of the ends and

means to accomplish the overall

mission objective and its related tasks.

Typically, there is an overall

operational objective to be evaluated to

determine whether a mission is deemed

successful or not. Measures of success

help quantify this objective, support the

purpose statement, and answer the

investigative questions. These

measures should help to quantify

impacts to the mission outcomes or

end-state. Measures of success could be

derived from source documents

describing specific missions and

scenarios, such as the Defense Planning

Scenario. An example MOS is the Joint

Force shall defeat 70 percent of the

adversary fleet in less than five days.

One or more MOEs help to characterize

the MOS. Measures of effectiveness

provide a means to assess and evaluate

various actors in the execution of their

tasks. Changes to MOEs (e.g.,

improving a given capability) can result in observations and help build understanding of the

sensitivity correlating to the MOS. Example MOEs are the number of red assets destroyed and
the number of targets tracked.

14

Air Force Doctrine Publication 3-0, “Operations and Planning,” p. 89, November 4, 2016

Example MOSs, MOEs, and MOPs

In a Joint Force mission to stop a major enemy ground offensive, the success of the mission (defined by MOSs) could be assessed by measuring the area of the battlespace still under friendly control. If the area remains unchanged, then the enemy’s offensive has been stopped, and the mission has been a success.

A Joint Force Air Component Commander (JFACC) might assess mission effectiveness (defined by MOEs) by measuring how many of the targeted enemy forces contacted friendly forces in coherent platoon-size or larger formations. If that number is small, protecting friendly troops and effectively blunting the enemy offensive, [then] the JFACC may conclude that the blue forces’ efforts were effective, and that they did the right thing.

The JFACC might assess [blue] force performance (defined by MOPs) by measuring the number of interdiction sorties successfully flown against enemy follow-on forces. If blue forces flew the planned number of sorties or more without loss, the JFACC can assess that blue forces are doing things right.

14


The attributes that characterize the specific performance of each actor—i.e., capabilities,

technologies, systems, and personnel in the mission approach—represent one or more MOPs.

Measures of performance are typically measurable data points that are collected and serve as inputs

to support the development and execution of the mission engineering activities. As MOPs are

tracked, there may be correlating changes to the MOEs and MOSs. Example MOPs include missile

speed, range, maneuverability, warhead size, lethality, and survivability.

Measures and metrics should be selected and scoped to the statement of purpose. Measures and

metrics will evolve as factors across the mission become more fully understood and as various

potential solutions are investigated. Relevant measures and metrics emerge after identifying: 1)

the purpose statement, investigative questions, and decisional needs; 2) the mission and its

objectives; and 3) the mission approach’s tasks and assigned actors. A high-quality MOS aligns to

the mission of interest and investigative questions associated with the purpose statement. The

MOEs and MOPs are defined and collected, as needed, in direct contribution to the MOS. The

MOEs and MOPs help to explain whether the MOS is being achieved and the factors contributing

to its achievement.
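The hierarchy described above can be sketched as a small data structure. This is a minimal illustration, not a prescribed model: the `Measure` class, the `trace` helper, and all numeric values are hypothetical. Only the MOS threshold (70 percent of the adversary fleet in less than five days) and the example MOE and MOP names come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Measure:
    """One node in the MOS -> MOE -> MOP hierarchy (illustrative structure)."""
    kind: str                      # "MOS", "MOE", or "MOP"
    name: str
    value: float                   # observed or simulated value
    contributors: list[Measure] = field(default_factory=list)


def mos_achieved(fraction_defeated: float, days_elapsed: float) -> bool:
    """Example MOS from the text: defeat 70 percent of the fleet in under five days."""
    return fraction_defeated >= 0.70 and days_elapsed < 5.0


def trace(measure: Measure, depth: int = 0) -> list[str]:
    """Flatten the hierarchy into indented lines, from the MOS down to the MOPs."""
    lines = [f"{'  ' * depth}{measure.kind}: {measure.name} = {measure.value}"]
    for child in measure.contributors:
        lines.extend(trace(child, depth + 1))
    return lines


# Hypothetical values, for illustration only.
missile_range = Measure("MOP", "missile range (km)", 900.0)
missile_speed = Measure("MOP", "missile speed (Mach)", 0.8)
red_destroyed = Measure("MOE", "red assets destroyed", 36.0,
                        [missile_range, missile_speed])
fleet_defeated = Measure("MOS", "fraction of adversary fleet defeated", 0.72,
                         [red_destroyed])

print("\n".join(trace(fleet_defeated)))
print("MOS achieved:", mos_achieved(fleet_defeated.value, days_elapsed=4.5))
```

Keeping the contributors attached to each measure makes it possible to trace a change in an MOP upward to the MOE and MOS it may affect.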

The data and observations gained from obtaining the selected measures and metrics should be

preserved for future analysis, potentially to inform revised baseline mission approaches or to help

accelerate follow-on efforts given what has been previously learned. High-quality measures and

metrics have the following characteristics:

Consistent and repeatable––to grade across subsequent iterations, trades, and alternative

mission approaches

Relevant and necessary––addresses the purpose statement

Solution agnostic––unbiased toward a specific mission approach or solution

Measurable––represents a scale, either directly observed or derived

Figure 4-3 illustrates the linkage of measures and metrics from the system level to the mission

objectives. The MOEs and MOPs connect through the mission architecture—from tasks to

systems, up to the MOSs. This balance ensures the use of valid measures and metrics in the

analysis.


Figure 4-3. Linkage of measures and metrics from the system or system of systems to mission levels.


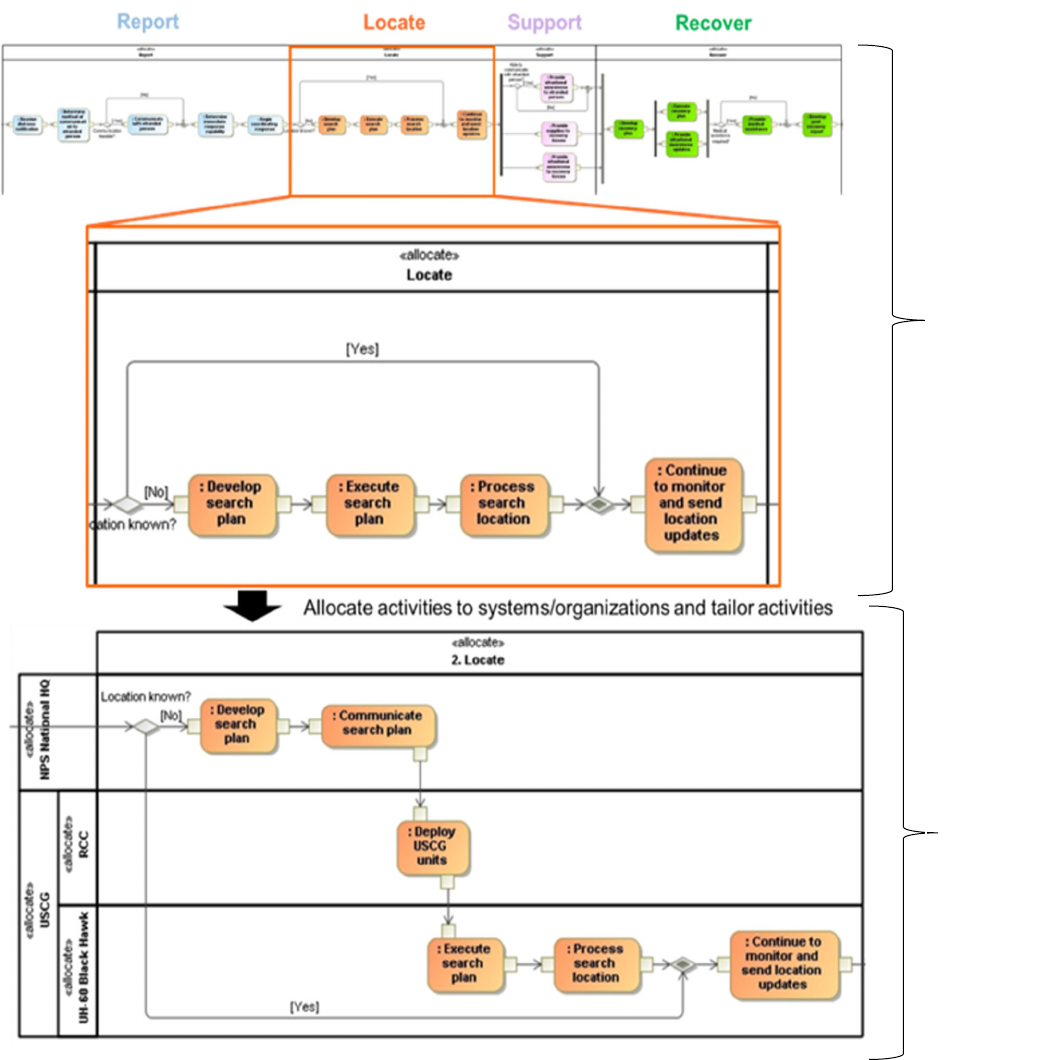

5.0 MISSION ARCHITECTURES

The architecture of a mission captures the structure of what activities, tasks, and events are

essential to the mission and how these activities are executed to achieve end-to-end mission

objectives. Mission architectures capture the relationships, sequencing, execution, information

exchanges, DOTMLPF-P considerations, and nodal linkages of elements within the mission.

Mission architectures provide a bridge between military operations on one side and functionality

on the other. In addition, mission architectures should reflect tactics and timing to complete the

necessary tasks to achieve mission objectives.

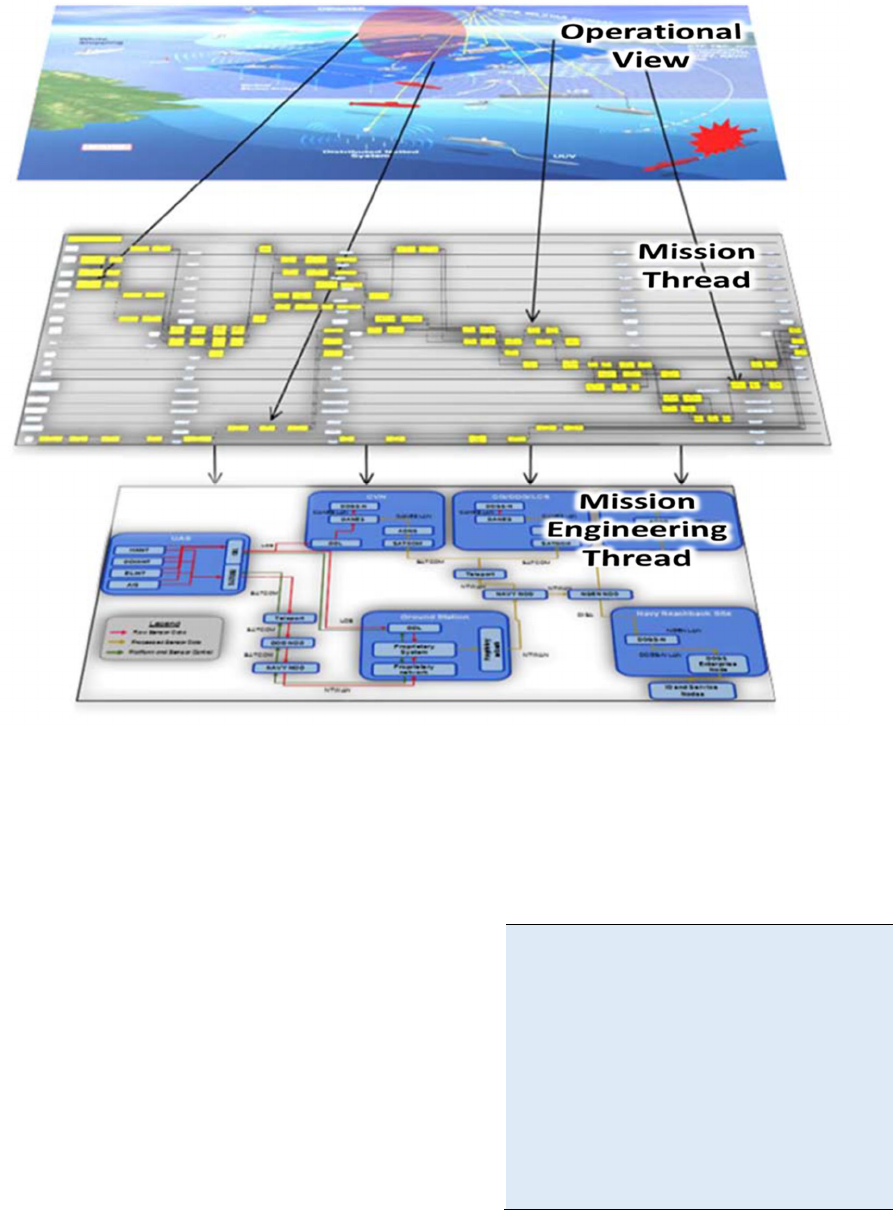

In mission engineering, there are two key elements of mission architectures: 1) mission threads,

which capture the activities of a given mission approach, and 2) Mission Engineering Threads

(METs). These elements capture how the mission activities related to the actors, systems, and

organizations are executed in a specific mission context captured in the scenario and related

vignettes (see Figure 5-1). A mission architecture can be thought of as an interwoven effects web,
or kill web, composed of many mission threads and METs.

Mission architectures provide the means to compare alternative mission approaches to conduct a

mission against a baseline mission approach. The models and data used to digitally represent

mission architectures should be tailored to suit the level of detail required to address the purpose

statement, investigative questions, and specific mission context of interest. The derivation of

mission threads and METs is an iterative process.

There are multiple ways to document a mission architecture and several notational approaches that

can be used to describe mission threads and METs, including BPMN, UML, SysML, UAFML, or

other Department of Defense standard architecture views.


Figure 5-1. Relationship of elements in a mission architecture from an operational view

(top, scenario), through an approach (middle, mission thread), to the assignment of actors

and systems (bottom, MET).

5.1 Mission Threads

Mission threads characterize the sequence of events,

activities, decisions, and interactions in an end-to-

end mission approach to achieve an operational

mission objective. Mission threads are distinct in that

they describe the task execution sequence in a chain

of events, not how or by whom each activity within

the flow is to be accomplished.

There is no single source for mission threads;

however, ample resources exist in the Joint Staff,

Services, and Combatant Commands––discussion

with stakeholders and subject matter experts from

these organizations is critical and will help mission engineers properly characterize missions and

develop mission threads. As inputs for mission thread development, practitioners may consider
resources like the Joint Mission Essential Task List (JMETL), the Universal Joint Task List (UJTL),
the Joint Common System Function List (JCSFL), and Service-specific task lists.

Mission Threads describe a set of tasks, activities, and events in an approach to conduct a mission.

Mission Engineering Threads assign the actors (people, systems, organizations, etc.) that perform the tasks, activities, and events in the approach to conduct a mission.

In context of a specific scenario, mission threads

describe tasks to be executed, leveraging doctrine,

tactics, techniques, procedures, and associated decision-

making cycles as well as any deviations from the Joint or

Service task lists.

Organizing tasks into a sequential description of the
mission approach is useful. Several broad constructs can serve as starting points. For example,
the task flow for a long-range fires (kinetic) mission thread may take the form of
Find–Fix–Track–Target–Engage–Assess (F2T2EA). Logistics and other supporting missions
may take a different construct. For instance, a cyber (non-kinetic) mission thread may follow the
task flow of Reconnaissance–Weaponization–Delivery–Exploitation–Installation–
Command and Control (C2)–Action on Objective.
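As a rough sketch, a mission thread can be represented as an ordered sequence of tasks with no actors attached. The `KineticTask` enum and the `is_valid_sequence` check below are hypothetical names introduced for illustration. The strict ordering rule is a simplifying assumption, since a real thread may loop back (for example, from Assess to Find).

```python
from enum import Enum


class KineticTask(Enum):
    """F2T2EA task flow named in the text, in execution order."""
    FIND = 1
    FIX = 2
    TRACK = 3
    TARGET = 4
    ENGAGE = 5
    ASSESS = 6


def is_valid_sequence(tasks: list[KineticTask]) -> bool:
    """True when tasks appear in strictly increasing F2T2EA order (assumed rule)."""
    return all(a.value < b.value for a, b in zip(tasks, tasks[1:]))


# Iterating an Enum yields members in definition order.
mission_thread = list(KineticTask)
print(" -> ".join(task.name.title() for task in mission_thread))
print(is_valid_sequence(mission_thread))
```

The thread records only what happens and in what order. Who performs each task is deliberately left out at this stage.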

5.2 Mission Engineering Threads

The development of one or more METs will complete the representation of a given mission

approach by adding the details on the actors––systems, technologies, organizations, and

personnel––necessary to accomplish the mission tasks. METs provide insights that inform

engineering designs and development considerations for systems and SoS.

The level of detail provided in a MET should be tailored to the purpose statement. Not all tasks in

the mission thread need to be assigned an actor. Practitioners may make some assumptions

regarding activities and events that are not central to the investigative questions––as long as these

assumptions are explicitly documented. As with mission threads, practitioners should validate

METs with stakeholders and subject matter experts.
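One way to picture a MET is as a mission thread whose tasks carry an assigned actor or, where no actor is assigned, a documented assumption. The sketch below is illustrative only: `TaskAssignment`, `undocumented_gaps`, and every actor name are hypothetical placeholders rather than real systems or a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskAssignment:
    task: str
    actor: Optional[str] = None        # system, organization, or person
    assumption: Optional[str] = None   # expected when no actor is assigned


def undocumented_gaps(met: list[TaskAssignment]) -> list[str]:
    """Return tasks that have neither an actor nor a documented assumption."""
    return [a.task for a in met if a.actor is None and not a.assumption]


# Hypothetical MET for a kinetic thread; names are placeholders.
met = [
    TaskAssignment("Find", actor="Sensor platform X"),
    TaskAssignment("Fix", actor="Sensor platform X"),
    TaskAssignment("Track", assumption="Track custody assumed; outside study scope"),
    TaskAssignment("Target", actor="Operations center Y"),
    TaskAssignment("Engage", actor="Bomber with standoff missile"),
    TaskAssignment("Assess"),  # neither actor nor assumption, so it is flagged
]

print(undocumented_gaps(met))
```

A check like this supports the guidance that unassigned tasks are acceptable only when the underlying assumption is written down.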

Figure 5-2 depicts the relationship between an individual mission thread and a MET. In practice,

the execution of a mission in a specific scenario may include the integration of multiple mission

threads and METs as effects webs or kill webs. Practitioners should ensure that a complete set of
threads and their associated relationships is represented in the mission architecture based on the

purpose and scope of the mission engineering effort.

Practitioners should validate derived mission threads with stakeholders and subject matter experts.


Figure 5-2. Example of a single mission thread and associated MET modeled in digital engineering tool. [Figure panels: Mission Thread; MET]

5.3 Develop Baseline and Alternative Mission Threads and Mission Engineering Threads

The mission threads and METs against which alternative mission approaches will be assessed are

called the baseline mission approach. Typically, the baseline is a starting point, or an initial

approach to the mission for the epoch (present or near future) of interest. When considering new

ways to improve mission outcomes, those changes should be represented by adding the modified

activities to the mission threads or by integrating the new technologies or systems to the METs.

These changes become alternative mission approaches. These alternatives are excursions from the

baseline mission approach, directly derived from the purpose statement and the investigative

questions. For example, if the mission engineering purpose is to explore cause and effect, then the

alternatives could be driven by a sensitivity to a particular parameter. If the purpose is to address

potential solutions or new opportunities, then the alternatives could model the implementation of

the driving forces behind those opportunities. In addition, alternatives can be chosen based on

known issues or gaps identified from the baseline. For each alternative, practitioners may need to

develop and validate separate METs (and possibly mission threads). Each baseline and alternative

MET should be clearly documented with controlled configurations. The documentation should

reflect any associated changes, including traceable sources of information and references.

To be most effective in interpreting results of the mission engineering activity, practitioners should

clearly understand and capture the changes being made between the baseline and alternative

mission approaches. Depending on the scope of the mission engineering activity, changes could

be focused on a single system or SoS within the baseline. Alternative mission approaches may

become new baselines for subsequent mission engineering efforts.
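Capturing what changed between the baseline and an alternative can be as simple as comparing the actor assigned to each task. The sketch below assumes a MET is stored as a plain mapping from task to actor. The function name `met_changes` and the task and actor labels are hypothetical and chosen only for illustration.

```python
from typing import Optional


def met_changes(
    baseline: dict[str, str], alternative: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Map each changed task to (baseline actor, alternative actor)."""
    tasks = baseline.keys() | alternative.keys()
    return {
        task: (baseline.get(task), alternative.get(task))
        for task in sorted(tasks)
        if baseline.get(task) != alternative.get(task)
    }


# Hypothetical baseline and alternative; labels are placeholders.
baseline = {
    "Launch": "Bomber aircraft",
    "Weapon": "GPS-guided standoff missile",
    "Support": "Jammers and aerial refueling",
}
alternative = {
    "Launch": "Surface ship",
    "Weapon": "GPS-guided glide vehicle",
    "Support": "Jammers and aerial refueling",
}

for task, (old, new) in met_changes(baseline, alternative).items():
    print(f"{task}: {old} -> {new}")
```

Recording the difference explicitly helps attribute any change in mission outcomes to the specific substitution that caused it.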

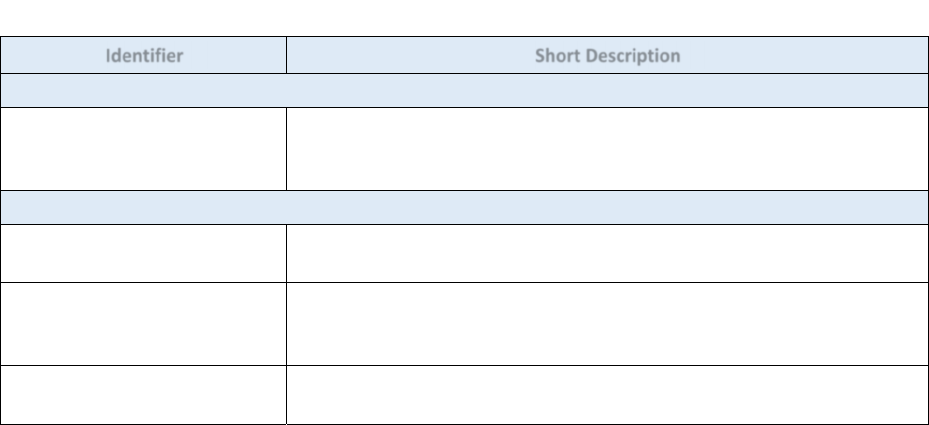

For illustration, Table 5-1 provides examples of baseline and alternative mission approaches when

considering an opportunity statement that is focused on understanding the mission impacts of

integrating new weapon systems with associated enhancements and enablers.

Table 5-1. Approaches to be examined

| Identifier | Short Description |
| --- | --- |
| Baseline Approach | |
| A. Conventional Approach | Employ Global Positioning System (GPS)-guided standoff missiles from blue force bomber aircraft, supported by jammers and aerial refueling, to attack red force aircraft |
| Alternative Approaches & Excursions | |
| B1. Bomber-launched glide vehicle with GPS | Substitute new bomber-launched glide vehicle weapon, GPS-guided |
| B2. Surface-launched glide vehicle with GPS | Excursion: Substitute launch platform to employ same glide vehicle weapon from approach B1, but launched at extended range from Surface Ship, GPS-guided |
| C. Bomber-launched subsonic cruise missile with GPS | Substitute new bomber-launched subsonic cruise missile, GPS-guided |

As already noted, mission architectures include mission threads and METs executed in the

specific mission context. The interdependencies revealed across this mission architecture

23

underscore the importance of reflecting all METs applicable within the scenario. This set of

integrated METs should be traceable to the mission engineering analysis and can serve as the

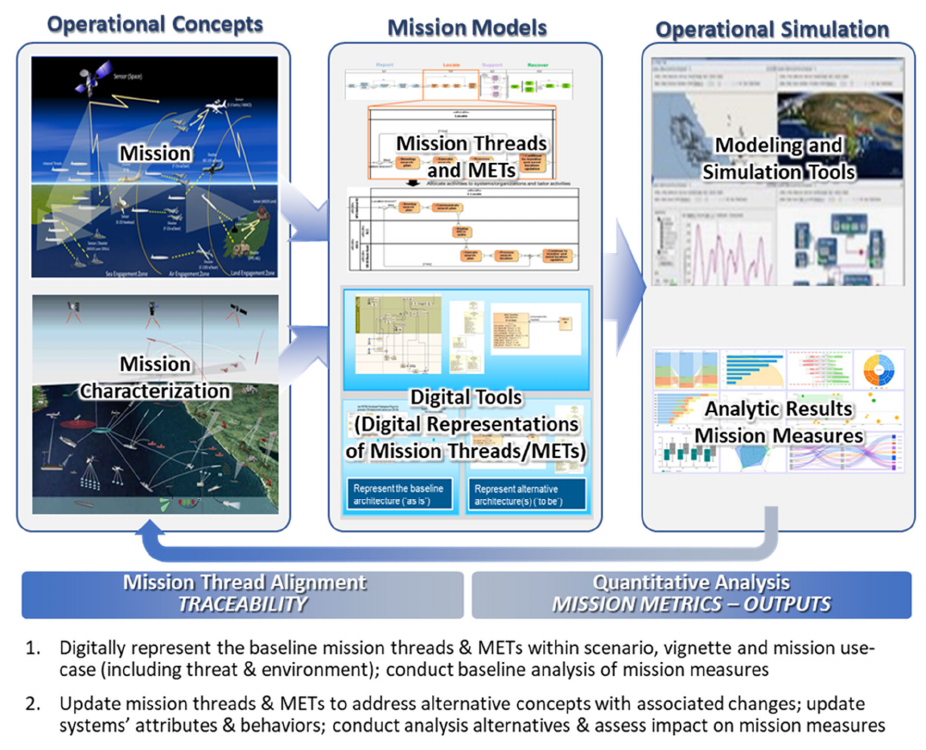

blueprint for further mission engineering activity as depicted in Figure 5-3.

Figure 5-3. Mission architectures are traceable to the mission engineering analysis.


6.0 MISSION ENGINEERING ANALYSIS

The core function of mission engineering analysis is to evaluate mission architectures within the

specific scenario-based mission context to provide quantitative outputs (i.e., measures and metrics)

that explore mission success. The analysis focuses on simulating the behaviors and effects of

executing the mission—the baseline and alternative mission approaches—amid potential

variations in conditions to assess mission impacts.

In conducting analysis of mission architectures, practitioners can benefit from expertise in systems

engineering (e.g., reliability engineering or risk management) and related processes, such as failure

mode and effects analysis, to assess the impact of system or task failure on the overall mission.

Powered by constructive simulations and predicated on operations research, mission engineering

analysis provides quantitative measures and metrics based on mission execution. The results can

inform the refinement and modifications to the mission architectures.

6.1 Complete Design of Analysis

A mission engineering analysis should be designed such that its outputs are provided to address

the purpose statement and answer the investigative questions. Some key aspects of the design of

analysis include:

Development of a run matrix

Identification of the appropriate computational and simulation analysis tools

Refinement of datasets

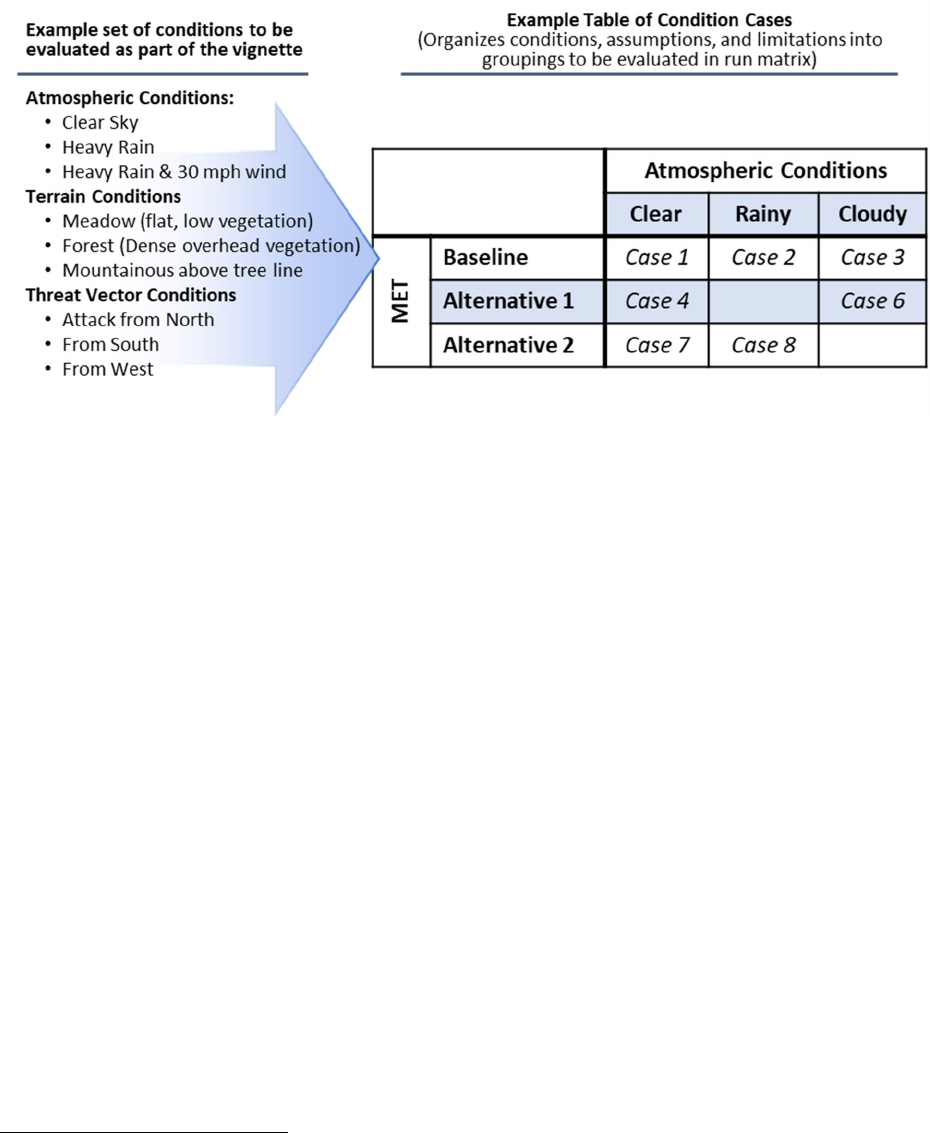

6.1.1 Develop and Organize Evaluation Framework (Run Matrix)

A run matrix is a structured set of mission approaches to be analyzed in a specified mission

scenario or vignette for the mission engineering analysis. The matrix should include the mission

baseline approach and various alternatives for comparison. Based on the questions to be addressed,

excursions should reflect the varying considerations and changing variables within the mission

context and baseline mission approach. The practitioner may consider identifying alternative

approaches to be explored based on known issues or gaps identified from the baseline. These

alternatives are the changes in the METs, described in Section 5.3. The run matrix is a useful way

to plan analysis and inform the selection of analytical approaches.

The primary elements of the run matrix are the mission approaches to be evaluated and the

operational conditions that may impact performance. For each entry in the run matrix, practitioners

should consider the availability of datasets and the fidelity and statistics needed from models and

simulations. Assumptions, limitations, and boundary conditions imposed on the run should be

included. Practitioners should also weigh the analytic methods and modeling tools appropriate to

obtain meaningful results.
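A run matrix of this kind can be sketched as the cross product of mission approaches and operational conditions. The approach identifiers below echo Table 5-1. The condition names and values, the `build_run_matrix` function, and the row layout are assumptions made for illustration.

```python
from itertools import product

# Approach identifiers follow Table 5-1; conditions are hypothetical.
approaches = ["A (baseline)", "B1", "B2", "C"]
conditions = {
    "weather": ["clear", "storm"],
    "gps": ["available", "degraded"],
}


def build_run_matrix(approaches: list[str], conditions: dict[str, list[str]]) -> list[dict]:
    """One row per (approach, condition combination); baseline rows come first."""
    names = list(conditions)
    rows = []
    for approach, combo in product(approaches, product(*conditions.values())):
        row = {"run_id": len(rows) + 1, "approach": approach}
        row.update(zip(names, combo))
        rows.append(row)
    return rows


run_matrix = build_run_matrix(approaches, conditions)
for row in run_matrix[:4]:
    print(row)
print("total runs:", len(run_matrix))
```

A full cross product grows quickly, so in practice rows may be pruned to the cases that bear on the investigative questions. Assumptions and boundary conditions could be added as extra fields on each row.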

Run matrices begin with trials, or excursions, associated with the baseline approach. Practitioners
should validate the run matrix with stakeholders and subject matter experts. Boundary conditions,
constraints, and limitations associated with each conducted run should be captured. Practitioners
may find that a tabular format, such as that shown in Figure 6-1, is a useful way to summarize and
organize relevant information derived from the run matrix.

Figure 6-1. Illustrative grouping of cases and set-up conditions.

15

6.1.2 Identify Computational Methodology and Simulation Tools

There are many analytical methods to assess the different mission approaches in the run matrix.

Practitioners should select the method most appropriate to the mission engineering purpose and

investigative questions. Potential methods include:

Bayesian analysis

Markov chain

Monte Carlo simulation

Regression analysis

Optimization analysis

Sensitivity analysis

Cost-benefit analysis

Stochastic modeling

Empirical modeling

Parametrization

15

Author’s note: depending on the mission engineering effort, not all cases may need to be executed.

Some instances of when to apply these methods for implementing mission engineering include
using optimization analysis to help find the best value for one or more variables under certain
constraints; using sensitivity analysis to determine how variables are affected by changes in other
variables; and using parametrization to express system, process, or model states as functions of
independent variables. The application of these methods can help refine assumptions and inputs
when more than one variable is unknown.
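As an illustration of sensitivity analysis, the sketch below perturbs one input at a time and records the change in a model output. The model `expected_defeated` is a toy assumption (independent shots against each target), not a validated combat model, and the parameter names and nominal values are hypothetical.

```python
from typing import Callable


def expected_defeated(params: dict[str, float]) -> float:
    """Toy model (assumption): each target receives `shots` independent shots."""
    p_kill = 1.0 - (1.0 - params["p_hit"]) ** params["shots"]
    return params["targets"] * p_kill


def one_at_a_time(
    model: Callable[[dict[str, float]], float],
    nominal: dict[str, float],
    delta: float = 0.10,
) -> dict[str, float]:
    """Change in model output when each input alone is raised by the fraction `delta`."""
    base = model(nominal)
    effects = {}
    for name, value in nominal.items():
        perturbed = dict(nominal, **{name: value * (1.0 + delta)})
        effects[name] = model(perturbed) - base
    return effects


# Hypothetical nominal inputs.
nominal = {"targets": 20.0, "p_hit": 0.5, "shots": 2.0}
effects = one_at_a_time(expected_defeated, nominal)
for name, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:8s} {effect:+.3f}")
```

One-at-a-time perturbation is the simplest form of sensitivity analysis and does not capture interactions between inputs. Methods that vary several inputs together may be more appropriate when interactions matter.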

The investigative questions, the measures and metrics, and the selected type of analysis will drive

the practitioner to choose the appropriate analytic tools for modeling and computing the run matrix

to assess mission impacts. As is the case with the selection of analytical methodology, practitioners

should select tools that best support the mission engineering activity. In selecting tools,

practitioners should account for the computational time of each trial, which is determined by the

model and analytic complexity, against the total time allotted for the mission engineering analysis.

Potential tools for mission engineering application include both government-owned and

commercial modeling, simulation, and architecture software. These tools enable the development

of models and the execution of analysis at the strategic, operational, and tactical levels and in

different domains (e.g., maritime, air, land, and space). These tools also enable analysis of different

mission operations––including electromagnetic, cyber, communications, jamming, and non-

kinetic versus kinetic operations—with different degrees of computational rigor, fidelity, or

complexity.

The choice of tools is driven by the complexity of the scenario, vignette, and mission threads.

Other factors influencing the choice of tools include the mission duration and computational

timesteps, the fidelity requirements of the data, and the number of variables feeding the measures

and metrics. The models derived from the selected toolset will handle error propagation (e.g.,

random and systematic) and uncertainty differently. Practitioners should understand the tools and

the relationship between a model and the pedigree of source data necessary to effectively use that

model.

6.1.3 Organize and Review Datasets

Much of the data needed for the analysis has been collected thus far from mission characterization

and the development of mission architectures. The different baseline and alternative options within

the run matrix will structure the analysis and may require additional data to develop models for

the simulation and analysis. Therefore, organizing and reviewing the data collected will help assess

additional data needs for the mission engineering effort. Datasets should come from trustworthy

data sources and be reviewed by subject matter experts to lend credibility to the models,

simulations, and results.

6.2 Execute Models, Simulations, and Analysis

Using the run matrix developed, practitioners should execute the simulation for the baseline and

alternative mission approaches to output results––mission measures and metrics. Depending on

the scope of the mission engineering activity, the baseline mission approach could be executed

before fully defining the run matrix to identify gaps or mission areas on which to focus

alternatives. Once processed and verified, the results will provide quantitative insights describing

the impact of the approaches on mission outcomes.


Practitioners should consider the following in the execution of modeling runs:

Executing and analyzing the baseline mission approach

Adjusting the run matrix, as necessary

Executing and analyzing the alternative mission approaches

Validating the analytic findings

6.2.1 Execute and Analyze the Baseline

Practitioners should model the baseline MET within the context of the scenario and vignette using

the tools to simulate and execute the mission. All relevant people and organizations, system

attributes, behaviors, and effects should be represented. Practitioners should conduct baseline

trials, as planned in the run matrix. The baseline runs should be critically examined to ensure the

modeled MET has captured known operational behavior and that simulation results match the

judgement of subject matter experts. In most cases, more information is known about the baseline

than the proposed alternative mission approaches, allowing practitioners to more readily check

that the models and analytic tools will deliver results consistent with expectations. Practitioners

should document mission measures and metrics, observations, and assumptions.

6.2.2 Adjust the Run Matrix (as Needed)

If unexpected results or additional gaps are discovered with the baseline output, practitioners

should consider adjusting the run matrix with appropriate alternative METs. It is more efficient to

adjust the run matrix at this stage than to wait until all trials have been run. Once adjustments are

made, practitioners should revalidate the run matrix with subject matter experts.

6.2.3 Execute and Analyze the Alternative Mission Approaches

Practitioners should model the alternative METs within the context of the scenario and vignette

using the chosen tools to simulate and execute the mission. Special cases should be noted,

including when runs do not go as predicted, where statistical convergence is not achieved, and

where models and simulations unexpectedly crash or yield an interesting singularity. Practitioners

should rerun trials where needed and record all encountered anomalies and events occurring within

the runs. Practitioners should capture mission measures and metrics, and observations or

assumptions. Interesting and unexpected results can be the source of excursions, variations on the

mission threads and METs, or further sensitivity analysis around specific parameters. Finally,

practitioners should document results for all entries in the run matrix.

6.2.4 Validate the Fidelity of Analytic Results

Three important considerations in any mission engineering analysis are:

Accuracy—systematic error, random error, anomalies, and artifacts

Precision—error analysis and statistical regression


Confidence—the interval, or range of possible values for a given parameter based on a set

of data, e.g., simulation results and the level or probability that the interval contains the

value of the parameter
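The confidence consideration above can be illustrated with an interval for the mean of a metric across repeated simulation trials. This sketch uses a normal approximation, which is an assumption. For a small number of trials, a Student's t interval would usually be wider and more appropriate. The trial values and the function name are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(samples: list[float], level: float = 0.95) -> tuple[float, float]:
    """Normal-approximation interval for the mean of independent trial results."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two trials to estimate spread")
    center = mean(samples)
    z = NormalDist().inv_cdf(0.5 + level / 2.0)   # about 1.96 for level=0.95
    half_width = z * stdev(samples) / n ** 0.5     # z times the standard error
    return center - half_width, center + half_width


# Hypothetical MOE values (targets defeated) from ten simulation trials.
trials = [14, 16, 15, 17, 13, 15, 16, 14, 15, 15]
low, high = mean_confidence_interval(trials)
print(f"mean = {mean(trials):.2f}, 95% interval = ({low:.2f}, {high:.2f})")
```

If the interval is too wide to distinguish the baseline from an alternative, more trials or a higher-fidelity model may be needed before drawing conclusions.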

Before executing additional analyses, practitioners should first evaluate whether the results from

the initial trials yield the fidelity—the accuracy, precision, and confidence—needed to answer the

investigative questions. With actual values now available for review, measures and metrics

identified as important in the design of the analysis may not be as hard-hitting as initially thought.

Other critical measures and metrics might have been overlooked. The aggregation of measures and

metrics should be reviewed to ensure findings will support the conclusions that inform

stakeholders and decision makers.

Practitioners should critique the results for both proper

execution of the analytic methods as well as the

soundness of results. The results should be graphed or

visualized to inspect the output. Practitioners should

assess whether the comparison of baseline and

alternative mission approaches is meaningful and
yields insights on the impacts of changes to mission outcomes. Example questions that

practitioners should confidently answer include:

Does the comparison of results from the baseline to the alternative mission approach trials

yield quality data sufficient to answer the investigative questions?

Are the results of these trials, i.e., the measured performance of the mission approach,

justifiable and explainable as a narrative and consistent with input from subject matter and

operational experts?

How do the assumptions and constraints for the analysis impact the interpretation of the

results?

Do the results address the investigative questions in a way that meaningful conclusions can

be drawn?

6.3 Adjust Mission Threads and Mission Engineering Threads

If answers to these questions are inadequate, or if other behaviors of interest are observed, then

practitioners should revisit the cycle of mission thread and MET development. Further adjustments

to the run matrix and trials may need to be made. The cycle follows this pattern:

Observe the mission

Conjecture a reason for that observation

Create an alternative mission approach to evaluate that conjecture

Evaluate if the conjectured case confirms the observation (i.e., fully answers the investigative

questions)

If the case does not confirm the observation, then repeat the cycle

Practitioners should review the analytic findings with stakeholders and subject matter experts.


7.0 RESULTS AND RECOMMENDATIONS

The final phase of the mission engineering process comprises three elements: 1) synthesis and

documentation of mission impacts and outcomes obtained from the mission engineering analysis,

2) the capture and presentation of recommended mission architectures, and 3) the curation of

mission engineering artifacts for future use.

The products of mission engineering help focus attention on a set of recommendations associated

with the purpose statement and investigative questions. Recommendations are used to inform

leadership, shape requirements, advise prototyping efforts, and substantiate acquisition decisions.

Recommendations help explain the attributes of recommended mission architectures, reflect the

MOSs as aligned with the original questions, and highlight the need for further analyses.

Major mission engineering products include:

Digital models of mission architectures—mission threads and METs

Collection of information on missions, scenarios, and current and future capabilities

Datasets—system performance parameters, models, metrics, and measures

Documented results, findings, and recommendations—visualizations, reports, briefs, digital

artifacts

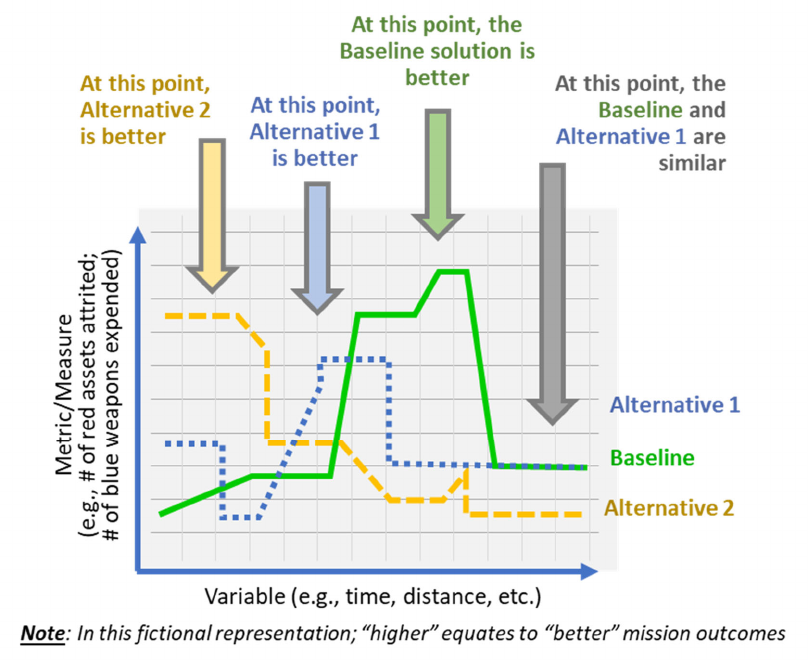

Figure 7-1. Example visualization of results from mission engineering analysis.


At a minimum, mission engineering products should document the overall purpose of the analysis

and its planning. The products also should include the influences that drove the selection of an

analytic framework––including assumptions and constraints, tools, models, measures and metrics,

and the results obtained. Practitioners should quantify the gain or loss toward the MOSs and other

key measures and metrics that help address the purpose statement. They should also identify any secondary or new

mission gaps that were discovered or could emerge from an implemented solution. Observed

trends or implications and relationships or correlations deduced from the data should be included.
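Quantifying gain or loss toward the MOSs can be sketched as a per-metric difference between an alternative and the baseline. The metric names and values below are hypothetical, and `gain_or_loss` is an illustrative helper rather than a prescribed calculation.

```python
def gain_or_loss(baseline: dict[str, float], alternative: dict[str, float]) -> dict[str, float]:
    """Alternative minus baseline for every metric reported by both approaches."""
    return {m: alternative[m] - baseline[m] for m in baseline if m in alternative}


# Hypothetical results for the baseline and one alternative approach.
baseline_results = {"fraction_defeated": 0.62, "days_to_complete": 6.0}
alternative_results = {"fraction_defeated": 0.74, "days_to_complete": 4.5}

for metric, change in gain_or_loss(baseline_results, alternative_results).items():
    print(f"{metric}: {change:+.2f}")
```

Whether a positive change is a gain depends on the metric: a higher fraction defeated is an improvement, while a higher number of days to complete is not.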

When documenting the overall mission engineering effort, practitioners should consider the

following outline of desirable points:

State the problem or opportunity, the questions, and the mission

Describe the scenario and vignettes, to include describing the operational environment

Identify blue (U.S.), red (adversary), and green or other non-adversary forces as well as

DOTMLPF-P considerations

Delineate measures and metrics for the mission (MOSs, MOEs)

Describe the mission architectures: the baseline and alternative mission threads and METs

Identify key assumptions and constraints about the mission, technology, or capabilities

Document details of the baseline mission approach and related condition cases

Explain the analytical methodology

Describe the results obtained from the analysis citing the fidelity and credibility of the

models, data, and results

Identify any non-error propagated uncertainties or other issues with the results

Justify or explain the fidelity of the results with a statistical basis

Describe the conclusions from the analysis and discuss how the results address the problem

or opportunity statement

Identify and capture risks in each mission architecture

Recommend actions for decision makers

Recommend further analysis and next steps
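
One possible way to give results a statistical basis, as the outline above suggests, is to report a confidence interval around the mean of a measure across replicated simulation runs. The sketch below is an assumption-laden illustration: the function name and sample data are hypothetical, and it uses a normal approximation, which understates the interval width for small numbers of runs (a t-distribution would be wider).

```python
import math
import statistics


def mean_confidence_interval(samples, confidence=0.95):
    """Return (mean, lower, upper) for the mean of replicated run results.

    Hypothetical helper; uses a normal approximation to the sampling
    distribution of the mean.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("at least two runs are needed to estimate spread")
    mean = statistics.mean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(n)
    # Two-sided critical value, about 1.96 for 95 percent confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean, mean - z * std_err, mean + z * std_err


# Assumed example data: mission success rate observed in six simulation runs.
runs = [0.70, 0.74, 0.68, 0.72, 0.71, 0.73]
mean, lower, upper = mean_confidence_interval(runs)
```

A narrower interval, obtained from more runs or less variable results, supports a stronger claim about the fidelity of the reported value.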

8.0 SUMMARY

The mission engineering process helps practitioners decompose missions into constituent parts and

analyze end-to-end mission execution. The process helps to identify and resolve capability gaps

and quantify impacts of alternative mission approaches. The process assesses systems or SoS

within that mission context and enables exploration of trade-space opportunities across that

mission.

The mission engineering process can help decision makers align resources to desired mission

outcomes by identifying the most promising potential materiel or non-materiel solutions and

opportunities. The goal of mission engineering is to engineer missions by identifying the right

things (the technologies, systems, SoS, or processes) to achieve the intended mission outcomes,

and to provide mission-based inputs into the systems engineering process to aid the Department in

building things right.

The mission engineering process is scalable to the problem or opportunity under evaluation, the

availability of data, and decisional needs. This flexible, iterative methodology allows analysis to

improve as information is gained throughout modeling and simulation runs, providing traceability

to data sources, assumptions, and constraints.

Mission engineering uses mission architectures to analyze the design and integration of systems,

SoS, and emergent capabilities within the context of a particular operational scenario and

vignette to yield desired mission outcomes. The results of mission engineering analyses inform

decision makers on potential trade-spaces in resource allocation to ensure the Warfighters will

have the capabilities, technologies, and systems they need to successfully execute their missions.

Simultaneously, mission engineering informs the evolution of requirements, system design, and

capability development via performance measures.

9.0 APPENDIX

9.1 Mission Engineering Glossary

Alternative Mission Approach: A change to the baseline mission approach for how the mission

will be executed. (OUSD(R&E))

Assumption: A specific supposition of the operational environment that is assumed true, in the

absence of positive proof, essential for the continuation of planning. (JP 5-0, Department of

Defense Dictionary)

Baseline Mission Approach: The agreed upon starting point for how the mission will be

executed to address the mission engineering effort; driven by the mission, scenario, and epoch.

(OUSD(R&E))

Blue Force: U.S. combatants. (OUSD(R&E))

Capability: The ability to complete a task or execute a course of action under specified

conditions and level of performance. (CJCSI 5123.01H, DAU Glossary)

Concept of Operations (CONOPS): A verbal or graphic statement that clearly and concisely

expresses what the commander intends to accomplish and how it will be done using available

resources. (JP 5-0, Department of Defense Dictionary)

Constraint: In the context of planning, a requirement placed on the command by a higher

command that dictates an action, thus restricting freedom of action. (JP 5-0, Department of

Defense Dictionary) Constraints may also refer to the range of permissible states for an object.

(Department of Defense CIO architecture Framework)

Data Curation: The ongoing processing and maintenance of data throughout its lifecycle to

ensure long-term accessibility, sharing, and preservation. (National Library of Medicine)

Digital Engineering: Digital engineering is an integrated digital approach using authoritative

sources of system data and models as a continuum throughout the development and life of a

system. (OUSD(R&E))

Epoch: A time period of static context and stakeholder expectations, similar to a snapshot of a

potential future. For acquisition planning, three epochs are usually defined: 1) near term, up to

two years into the future; 2) FYDP (Future Years Defense Program), up to five years into the

future; and 3) beyond the FYDP, 5–10 years into the future. (Naval Postgraduate School; MIT)

Fidelity: A measure of the accuracy, precision, and statistical confidence to which the data,

result, etc. represents the state and behavior of a real-world object or the perception of a real-

world object, feature, condition, or chosen standard in a measurable or perceivable manner.

Green Force: Allied combatants. (OUSD(R&E))

Kill Chain: A Mission Thread with a kinetic outcome. Dynamic targeting procedures often

referred to as F2T2EA by air and maritime component forces; and Decide, Detect, Deliver, and

Assess methodology by land component forces. (JP 3-09)

Kill Web: An inclusive set of multiple integrated Mission Threads and METs for the applicable

scenario or vignette of interest. (OUSD(R&E))

Measure: The empirical, objective, numeric quantification of the amount, dimensions, capacity,

or attributes of an object, event, or process that can be used for comparison against a standard or

similar entity or process. (AFOTECMAN 99-101; Science Direct)

Measure of Effectiveness (MOE): Measurable military effects or target values for success that

are derived from executing tasks and activities to achieve the MOS. (OUSD(R&E))

Measure of Performance (MOP): Measurable performance characteristics or target parameters

of systems or actors used to carry out the mission tasks or military effect. (OUSD(R&E))

Measure of Success (MOS): Measurable attributes or target values for success within the

overall mission in an operational environment. Measures of success are typically driven by the

mission objectives of the blue force. (OUSD(R&E))

Metric: A unit of measure that coincides with a specific method, procedure, or analysis (e.g.,

function or algorithm). Examples include mean, median, mode, percentage, and percentile.

(AFOTECMAN 99-101)
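
To illustrate how metrics summarize a set of measures, the following sketch computes the example metrics named above with Python's standard `statistics` module. The data values, the 25-minute threshold, and the function name are hypothetical.

```python
import statistics

# Hypothetical measures: time to detect a target, in minutes, from ten runs.
detect_minutes = [12, 15, 15, 18, 20, 22, 25, 30, 34, 41]


def summarize(measures, threshold):
    """Compute example metrics over a list of numeric measures."""
    ordered = sorted(measures)
    # quantiles(..., n=100) returns the 99 cut points between percentiles.
    percentiles = statistics.quantiles(ordered, n=100)
    within = sum(1 for m in ordered if m <= threshold)
    return {
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "mode": statistics.mode(ordered),
        "pct_within_threshold": 100.0 * within / len(ordered),
        "p90": percentiles[89],  # 90th percentile
    }


summary = summarize(detect_minutes, threshold=25)
```

Each entry in `summary` is a metric derived from the same underlying measures; which one is reported depends on the question the analysis is meant to answer.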

Mission: The task, together with the purpose, that clearly indicates the action to be taken and the

reasoning behind the mission. (JP 1-02)

Mission Architecture: A view or representation that depicts the ways and means to execute a

specific end-to-end mission, with relationships and dependencies amongst mission elements.

This includes elements such as mission activities, approaches, systems, systems of systems,

organizations, and capabilities. (OUSD(R&E))

Mission Characterization: The aggregate of factors associated with military objectives and

operations; this includes the mission to be accomplished in a specific time and place, the

measures of success, the threats, and constraints. Changes in any factors of the mission

characterization may cause the mission to be redefined. (OUSD(R&E))

Mission Context: The elements that describe the who, what, when, where, and why of the

mission to be accomplished. Changes in any elements of the mission context may cause the

mission to be redefined. (OUSD(R&E))

Mission Element: A person, organization, platform, and/or system that performs a task.

(OUSD(R&E))

Mission Engineering: An interdisciplinary process encompassing the entire technical effort to

analyze, design, and integrate current and emerging operational needs and capabilities to achieve

desired mission outcomes. (OUSD(R&E))

Mission Engineering Analysis: The approach to evaluate mission architectures within the

specific scenario-based mission context to provide quantitative outputs that explore mission

impacts. (OUSD(R&E))

Mission Engineering Thread (MET): Mission threads that include the details of the

capabilities, technologies, systems, and organizations required to execute the mission.

(OUSD(R&E))

Mission Tasks: A clearly defined action or activity specifically assigned to a system, individual,

or organization that must be completed. (Adapted from JP-01)

Mission Thread: A sequence of end-to-end mission tasks, activities, and events presented as a

series of steps to achieve a mission. (OUSD(R&E))

Model: A physical, mathematical, or otherwise logical representation of a system, entity,

phenomenon, or process. (DoDI 5000.61, DoDI 5000.70) per MSE. Per the Systems Engineering

Body of Knowledge, Models are often categorized as Descriptive, Analytic, etc. (Systems

Engineering Body of Knowledge)

Operations: 1. A sequence of tactical actions with a common purpose or unifying theme. (JP 1)

2. A military action or the carrying out of a strategic, operational, tactical, service, training, or

administrative military mission. (JP 3-0, Department of Defense Dictionary) There are

Operations–sequences of tactical actions with a common purpose or unifying theme; Major

Operations–series of tactical actions to achieve strategic or operational objectives; and

Campaigns–series of related major operations aimed at achieving strategic and operational

objectives within a given time and space. (JP 1)

Planning, Programming, Budgeting, and Execution (PPBE) Process: The primary resource

allocation process of the Department of Defense. (DAU Glossary)

Red Force: Adversary combatants. (OUSD(R&E))

Scenario: Description of the geographical location and timeframe of the overall conflict. A

scenario includes information such as threat and friendly politico-military contexts and

backgrounds, assumptions, constraints, limitations, strategic objectives, and other planning

considerations. (OUSD(R&E))

Sensitivity Analysis: Determines how different values of an independent variable affect a

particular dependent variable under a given set of assumptions. (Investopedia website,

https://www.investopedia.com/terms/s/sensitivityanalysis.asp)
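
A minimal sketch of a one-at-a-time sensitivity analysis follows. The toy model (probability of at least one hit from independent shots), its parameter names, and the base values are assumptions chosen only to illustrate the definition; they are not drawn from this guide.

```python
def mission_success(p_hit, shots):
    """Toy model: probability that at least one of `shots` independent
    engagements succeeds, each with single-shot probability `p_hit`."""
    return 1.0 - (1.0 - p_hit) ** shots


def sweep(model, base_inputs, name, values):
    """Vary one independent variable while holding the others at their
    base values, and record the dependent variable for each setting."""
    results = []
    for value in values:
        inputs = dict(base_inputs, **{name: value})
        results.append((value, model(**inputs)))
    return results


# Assumed base case: single-shot probability 0.5 with two shots.
base = {"p_hit": 0.5, "shots": 2}
by_p_hit = sweep(mission_success, base, "p_hit", [0.0, 0.5, 1.0])
by_shots = sweep(mission_success, base, "shots", [1, 2, 3])
```

Comparing how much the output moves across each sweep indicates which input the result is most sensitive to under the stated assumptions.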

Tactical: The level of employment, ordered arrangement, and directed actions of forces in

relation to each other, to achieve military objectives assigned to tactical units or task forces

(TFs). (Adapted from JP 3-0, Chapter 1, 6.d.)

Threat: The sum of the potential strengths, capabilities, and strategic objectives of any

adversary that can limit U.S. mission accomplishment or reduce force, system, or equipment

effectiveness. The threat does not include (a) natural or environmental factors affecting the

ability of the system to function or support mission accomplishment, (b) mechanical or

component failure affecting mission accomplishment unless caused by adversary action, or (c)

program issues related to budgeting, restructuring, or cancellation of a program. (DAU Glossary,

CJCSI 5123.01H)

Verification: The process of determining that a model or simulation implementation and its

associated data accurately represent the developer's conceptual description and specifications.

(JP 3-13.1, Department of Defense Dictionary)

Validation: The process of determining the degree to which a model or simulation and its